GPT-4o, a Real-Time Multimodal Model

« Discover GPT-4o, OpenAI's new flagship model. It reasons across audio, vision, and text in real time, making interaction with AI more natural »

OpenAI has announced GPT-4o, its new flagship model, which can reason across audio, vision, and text in real time. It is a significant step toward more natural and intuitive collaboration between humans and machines.

GPT-4o: ChatGPT can see, hear and understand

GPT-4o accepts any combination of text, audio, and images as input and can generate text, audio, or images as output. It responds to audio input in an average of 320 milliseconds, which is close to human response time in conversation. This makes spoken interaction with the model feel smooth and natural.

Exceptional Performance in Multiple Domains

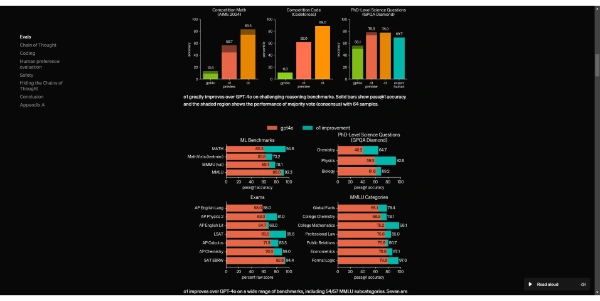

GPT-4o matches GPT-4 Turbo on English text and code, and it performs significantly better on text in non-English languages. It is also notably stronger at vision and audio understanding than existing models.

A Faster, Cheaper, and More Accessible Model

In the API, GPT-4o is twice as fast as GPT-4 Turbo, costs half as much, and has rate limits five times higher. Lower prices and higher limits make it practical for more developers to build AI-based applications.

The Future of Human-Machine Interaction with OpenAI

With GPT-4o, OpenAI moves toward AI systems that can understand and communicate with people more naturally. The model could change work in many fields, including customer service, content creation, education, and research.

Review and Conclusion About GPT-4o

In conclusion, GPT-4o is an important step in the evolution of AI and ChatGPT, enabling real-time multimodal interaction that earlier models did not offer. With this model, OpenAI reinforces its pioneering role in artificial intelligence and opens new possibilities for the future of the technology.

You May Also Like